[Firstly, this is a big one, and it sprawls pretty quick. You might want to bring a snack and call your loved ones before taking it on. It is also overflowing with spoilers. I shall try not to spoil anything in the first few words of any paragraph, so if you can be bothered to read on, just skip past whatever texts you don’t want to see mangled through the lens of my wholly subjective nitpicking.]

IMAGE: A Tearaway Selfie (Media Molecule)

Phase the First: Wherein I Try To Get All Self-Reflexive And Fail

It’s always foolish to try to sum up an entire year as being ‘about’ one thing.

People do it all the time, of course. Articles get written. Cheesy montages get rolled out in news broadcasts. YouTube even compiled a ‘What Did 2013 Say’ clip (presumably alongside its weekly ‘Most viewed cats falling into sinks’ list). Whenever an untrammelled January rolls around everybody gets lost in a wave of nostalgia that invariably leads to a lot of tortured attempts to squeeze the newly concluded year into a neatly digestible oneness. Usually this is achieved by referencing some pithy term or title that’s seen to capture the whole. The preceding twelve months are suddenly labelled the year of the ‘Twerk’, or the year of the ‘hashtag’, or the year of ‘the Doctor’ (I want to go on record as saying that last one is completely legitimate)*, and once this revisionist summary is offered, everyone nods, files the year away, and prepares to watch the whole cycle unfold again.

Yes, it can too often be merely cheap pabulum used to fill up slow news days as the holidays descend, or the arrogance of a commentator presumptive enough to try and force their subjective experience of the world down the throat of their audience, but it remains the product of a larger imaginative exercise at the heart of our communal experience.

See, we humans like to categorise, to segment. We make lists, we put things in conceptual boxes. It’s why we have terms like ‘This thing is the new black…’ or ‘This thing is the best thing since sliced bread…’ (which has really never seemed that gigantic a leap in design innovation to me, but whatever). Millennia ago we decided to start subdividing the inexorable passage of our mortal lives into incremental beats.

We invented calendars, seasons, years, and seconds. We called this process ‘time’, and it helped us put things into all sorts of useful explanatory categories: socially we had the ‘Renaissance’, the ‘Dark Ages’, the ‘Roaring Twenties’; privately we had our ‘tweens’, our ‘mid-life crises’, our ‘golden years’.

(Later we would even invent a magazine that we also decided to call ‘TIME’, and even it started getting nostalgic and naming people ‘Person of the Year.’ See? We can’t help our little selves…)

Everything had a label, everything had a place, and these classifications helped explain one period’s relationship to everything else in the continuum: Romanticism was a reaction to the Industrial Revolution; the paranoid anti-government sensibility of The X-Files gave way to the pro-security, cowboy morality of 24; the Teletubbies and that freaky glowing baby head in the sun gave way to whatever tweaked out cocktail of amphetamines conjured Yo Gabba Gabba.

But despite being a natural impulse of our communal efforts to wrangle a rational shape onto the indifferent, chaotic maelstrom of the world around us, it is still foolish to presume that any period in time can be one thing. Indeed, it’s asinine to think that the multitudinous panoply of human experience – a miasma of social, political, and ideological concurrence, each impacting upon one another in incomprehensibly complex, intricate ways – could ever be reduced to some pithy catchphrase, wrapped up with a trite little bow.

You’d have to be an idiot, so drunk on your own arrogance that you were wilfully blind to reality, ripe for embarrassment and derision…

…And can you imagine if that clown tried to publish such a redundant retrospective in February?

Ha. Ha Ha Haaaaaa…

Ha.

So anyway:

2013 was all about the Selfie.

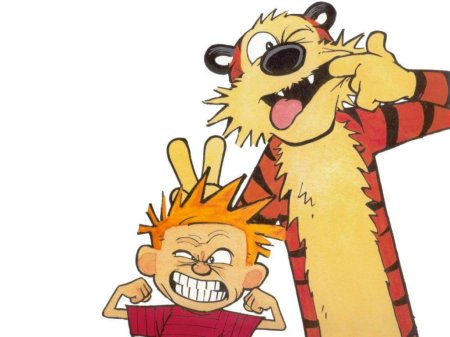

IMAGE: The exquisite Calvin and Hobbes ‘selfie’ by Bill Watterson

Phase the Second: Wherein I Explain Myself – While Taking Petty Pot Shots At Celebrities I Will Never Meet

I mean, I must be right, no?

After all, if I were to use the most hackneyed tactic of the lazy debater, I would just jump straight to a dictionary definition – and this was the year that Oxford Dictionaries declared ‘Selfie’ (the act of taking a photograph of oneself and uploading it to social media) as their word of the year.** According to Oxford, given the ubiquity of the practice (visible in the popularity of websites such as Instagram and Vine) and the explosion of usage for the word itself (which they claim has risen 17,000% in the span of one year) ‘Selfie’ best encapsulates the cultural zeitgeist.

(It is also the dictionary definition of the unspeakable horror haunting the dreams of anyone subjected to yet another one of Geraldo Rivera’s bids to put his job description in perpetual inverted commas).

So, as summaries of 2013 go, I think it’s an entirely fitting choice – though not, perhaps, for the reasons that might at first spring to mind.

Sure, if one chooses to view it uncharitably, the word can appear to be a searing indictment of a culture descending into narcissistic excess. In a year in which Miley Cyrus followed the same tired routine of nearly every pop starlet before her and tried to ‘rebrand’ herself as a sexualised adult in the most predictably derivative way possible (Twerking! Naked video clips! Tongue photos! …Can anybody even get through reading the words ‘Miley Cyrus’ and ‘controversy’ without having to stifle a yawn anymore?), in a year where Justin Bieber adamantly hoped that Anne Frank would have been a rabid fan of his, and Shia LaBeouf disappeared up his own …ego, revealed to be an egomaniacally deluded serial plagiarist, it may seem that the word ‘Selfie’ is a fitting label for a culture too concerned with celebrating the vain and self-involved; a society so obsessed with itself that simply the act of existing, possessing a face, and having the capacity to sign up to a social media account, is enough to warrant celebration.

But dig deeper than this rudimentary cynicism, and the act of taking a ‘Selfie’ offers a far more fitting metaphor for the state of contemporary culture…

After all, this is a year in which the western world has been in a constant interrogation of the nature of selfhood; a year in which our news, our entertainment, our politics, all meditated upon the notions of privacy, individuality, and identity as arguably never before. 2013, it turns out, was posing for a ‘selfie’, and the result, as we uploaded it to our Facebook accounts (which had just removed the option to make your account ‘Private’ in its search engine) was a spray of contradictory emoticons that are quite revealing to explore…

Phase the Third: Wherein I flatter myself to think that the NSA would give even half a damn about this blog

In the news, identity – its mutability, its sanctity, its currency – was repeatedly at the forefront of many of the stories that dominated the headlines.***

The year was littered with bizarre stories of pseudonyms and squabbles over the ‘true’ identity of some of the world’s most prominent figures. In most years it would be difficult to know what to do with the information that the ‘real’ Richard III had just been discovered under a car park, or to make sense of why the western world should stop to observe the otherwise unremarkable birth of a healthy baby boy (a boy who, before his fontanels had even closed, had the weight of a wholly ceremonial British aristocracy placed upon his shoulders). But in 2013, it all seemed to make a deranged sense: this was a year obsessed with identity; about who you were and where you were and what (if anything) that meant.

The year began by exposing the ease with which identity can be fabricated.

In January, Manti Te’o, football player for Notre Dame, was revealed to have had an entirely fictional girlfriend. Te’o had played an heroic year of football, seemingly in the shade of the death of both his grandmother and his girlfriend, Lennay Kekua, on the same day. It was believed that Kekua had ‘died’ the previous year, having suffered complications from her leukaemia – but the whole affair was later revealed to have been another ugly example of someone being ‘catfished’. To take Te’o’s account of the story after the deception was revealed, he had believed that he was dating a woman in a long distance relationship, but was shocked to later discover that she was actually just the product of an extended prank being pulled by a man named Ronaiah Tuiasosopo.

The revelation created all manner of embarrassment and confusion, but what this strange incident best illustrated – as people tried to pick through the contradictory details that had appeared in the public record over the past year – was the way in which real and fictional people had become inextricably blurred in the media’s account of Te’o’s rise to prominence. Indeed, whatever Te’o knew of the deception, the way in which the media, in their hunger for myth-making pathos, helped calcify a false identity into ‘truth’ was something quite extraordinary – some biographical articles had even romantically described the details of their first flirtatious meeting, where apparently, against all conceivable logic, they locked eyes, and were drawn into the gravity of each other’s gaze.

Although under very different circumstances, ex-Congressman Anthony Weiner also found his bid for New York mayor scuttled by a personal controversy rooted in false identity. Attempting to return to politics after being disgraced two years earlier by the revelation of his salacious online proclivities (is that the most round-about way ever to say that he was sending people photographs of his penis?), Weiner had been attempting to run for mayor under the pretence that he was a changed man, one who had made mistakes, sure, but who had learned from these failures and put them behind him. He was returning to public service more honest and self-disciplined. In truth, Weiner had continued to engage in multiple texting affairs, and when this conflict in his image was exposed, it was in the form of a whole other identity: the alias ‘Carlos Danger’. Weiner did continue on in one of the most weirdly antagonistic, sometimes petulant runs for office ever – getting into verbal confrontations with voters, mocking reporters for their accents, flipping people the bird after his concession speech – but the cognitive dissonance between the identity that he wanted to present to the world, and the one that he shared with people over the internet (the one that he had seemingly named after watching a poorly dubbed Mexican telenovela) proved too great, and his chances at victory evaporated.

On a far more serious note, this year saw the return of one of the most heinous cases of ‘mistaken identity’ in recent history. In June, the shooting of Trayvon Martin, an unarmed African American teen, by George Zimmerman, a self-appointed ‘neighbourhood watchman’, returned to the headlines when Zimmerman was brought to trial (the incident itself had occurred the previous year). Zimmerman was eventually acquitted of all charges brought against him, with Florida’s ‘Stand Your Ground’ laws cited as a primary reason for the not guilty verdict, and the outrage in response to this apparent racial discrimination (seemingly Martin could be suspected of being a criminal, be stalked, accused, and then killed, because he committed the ‘crime’ of wearing a hoodie while black) erupted again. The prejudice that Martin had suffered, both at the hands of Zimmerman and in some parts of the media in the aftermath of the shooting (semi-conscious moustache-hanger Geraldo Rivera stated that Martin’s decision to wear a hoodie was as much to blame as Zimmerman for the incident), was seen to be a grim reflection of the experience many African Americans and minorities still face in contemporary society. The protestors’ rallying cry ‘We Are Trayvon Martin’ (and later ‘We Are Not Trayvon Martin’) therefore became both a reclamation of identity and a potent statement on the universal suffering caused by bigotry.

IMAGE: US Protests Over Trayvon Martin Verdict (Reuters)

As the year drew to a close, squabbles over identity, and how best to categorise a person’s life, continued on, often in the most asinine of ways. The passing of Nelson Mandela was met with several critics bickering over whether he should be remembered as a beacon for hope, forgiveness and change, or as a ‘terrorist’; and not even Santa Claus was immune, dragged into incoherent disagreements over whether or not he was white. Fox News anchor Megyn Kelly took the time to indignantly insist to her audience that Santa was indeed Caucasian, thank you very much – no matter what a Slate article by Aisha Harris might have playfully mused. Kelly later claimed that she was trying to inject ‘humour’ into her broadcast (something one might argue is already impossibly redundant for a show on Fox), but her declaration that ‘for all you kids watching at home: Santa just is white – Santa is what he is’ seemed far more spiteful and territorial than it did festive and jolly. (Although, to be fair, she makes most everything sound that way.)

Inarguably, the most glaring example of this new concern with identity surfaced at the midpoint of the year, however, with the revelation that the United States’ National Security Agency was no longer only tasked with targeting potential security threats, but was methodically spying on foreign leaders (such as Germany’s Chancellor Angela Merkel) and routinely gathering the mass telephone, email and search data of all of its own citizens.

The scandal broke after Edward Snowden, a former CIA employee contracted to the National Security Agency, became alarmed by what he was seeing at the NSA and decided to expose what he believed was a massive systemic overreach in their intelligence gathering operations. He gathered together sensitive documents and, taking a leave of absence, leaked them to the press. He subsequently fled into hiding, whereupon his passport was revoked, he was charged with espionage, and he was sought by the US government for extradition and trial.

The central program with which Snowden took issue was labelled ‘PRISM’ (because all of this didn’t sound enough like a Bond film plot already…). It was a system that gathered together into one database the user information and online content of any person who had any contact with the services of several major American companies such as Apple, Microsoft and Google.**** Emails, phone calls, Skype chats, browsing histories, documents, all were seemingly available for perusal; and the only requirement for accessing this private information was a ‘three-hop query’, which meant that it could monitor the information not only of a suspect, but of anyone who might have had contact with that suspect, and then anyone who might have had contact with them, and then anyone who might have had contact with them. It was like a Six Degrees of Kevin Bacon, only with less eighties nostalgia and more potential for invasive governmental overreach. (Also, it made that town from Footloose look positively anarchic by comparison.)

It was now possible for people to be implicated and scrutinised not because of who they were, or who they associated with, but because they might once have had contact with someone who knew someone who knew someone… Singular identity risked becoming so dispersed as to be meaningless at a time in which, ironically, a system had been designed to isolate the individual from the cacophony of the crowd.

In the process of bringing PRISM’s existence to light, Snowden’s own identity became currency as he traded obscurity for international notoriety. He effectively became a human Rorschach Blot in the process – a ‘hero’, a ‘traitor’, an ‘anarchist’, a ‘patriot’, all depending upon who was describing him. Meanwhile his revelations prompted a fierce, worldwide debate about the appropriate balance to strike between personal liberty and communal safety, between America’s proclamations of valuing freedom of speech and thought, and a potentially overriding duty to public safety.

The world has, of course, been witness to debates such as these in the past – the fallout from the McCarthy hearings and their hunt for communists being but one such example – but never before has the scope been so wide, nor the potential for personal invasion so absolute. Consequently, Snowden’s revelations have sparked a philosophical quandary that continues to rage, and it is proving itself to be one that has far-reaching ramifications for the heretofore uncharted landscape of cyber identity in a borderless digital age.

IMAGE: Edward Snowden (The Guardian)

Phase the Fourth: Wherein I Talk About Authorship …Sort Of

In 2013 the world of entertainment was likewise obsessed with identity at every level of the communicative chain: characters scrutinised their selfhood as never before, authors used the truth of themselves as another narrative tool, even audiences were compelled to consider their own place in the way texts make their meaning.

Perhaps most notably, the year saw the unveiling of a new generation of videogame consoles. One of these new platforms, however, was almost sabotaged by issues of identity before it had even launched.

There were many nails in the coffin of Microsoft’s original tone-deaf and aggressive design policies for their new Xbox One – when the phrase ‘#dealwithit’ is inextricably linked with your product you can probably surmise that there is a corrosive disconnect between company and consumer – so to select one blunder amongst their cavalcade of PR missteps would be all but impossible. Certainly one of the most publicised though was the ‘always on’ requirement of the Kinect peripheral. Microsoft were demanding that people who wanted to buy their console had to also purchase the Kinect (it came bundled with the machine), a camera and microphone attachment that remained constantly connected to the internet, that was capable of reading intricate body behaviour and recognising speech, and which the owner was never allowed to switch off, cover, or disconnect from Microsoft’s servers.

No doubt convinced that they could weather the birthing pains of entertainment’s shift toward all-digital media (or so they thought), Microsoft were insisting upon this intrusive requirement (amongst numerous others), because they knew that identity itself is profitable. After all, regulating the sale of a game to an individual’s nametag stood to make far more money than allowing that person to own the game without restriction (to sell or pass it on to others); being able to monitor how many people were in a room about to watch a downloaded new-release movie had a potential for further revenue; collecting data on each individual member of your audience’s entertainment habits allowed the dashboard advertising to be more effectively targeted to their specific interests. Identity was currency – a guaranteed future earner after the initial sale; and by better understanding who their audience were (and retaining complete control over what and how they consume), they stood to be far more profitable.

But coming as it did in the immediate wake of the NSA spying scandal, amidst accusations that several prominent companies – including Microsoft – were willingly supplying the PRISM program with information, to many this promise of compulsory intrusion into one’s private space seemed rather distasteful. The expressionless, unblinking eye of the Kinect suddenly became the symbol of a rally against the company’s other proposed draconian policy changes: the licensing rather than the ownership of games; the alienation of the indie market; the requirement to always play online; the inability to lend or re-sell games; the start button demanding that you sacrifice three kittens to a golden altar of the Master Chief’s head, etc.

IMAGE: Xbox One Kinect Peripheral: ‘Look Consumers, I can see you’re really upset about this. I honestly think you ought to sit down calmly, take a stress pill, and think things over.’

Thrust into the public’s consciousness, the Kinect and the Xbox One became a referendum on the right to preserve one’s identity (their gaming and viewing habits, their personal information, and even their personal space) from corporate exploitation, and Microsoft watched as it was voted on by consumers in real time – even before the point of purchase. Pre-order sales floundered, pre-release press turned sour, and when Microsoft’s only reply to the concerns of its audience was to have the (not surprisingly now replaced) head of their Xbox division, Don Mattrick, petulantly tell them that they had to either accept these impositions or just keep buying their old last-generation machine, the mood became even darker.

Eventually, when it was clear that their closest competition, Sony, was romping away with victory, simply by treating their customers as adults who could decide for themselves how to use the products they owned (ironic considering Sony’s own controversy with a similar, but less invasive issue on the PS3), Microsoft was forced to walk back every one of their policies in response to the ‘candid feedback’ of their fans.

In a different way, identity was also at the heart of several of the year’s biggest literary scandals. In Australia, two award-winning poets, Andrew Slattery and Graham Nunn, were revealed to be serial plagiarists, rather shamelessly trying to elevate their own names by stealing from the work of others. Only after their works had been revealed to be Frankenstein’s monsters of unattributed, verbatim quotation did either of them attempt to explain them as pieces of ‘pastiche’ – until that moment they were happy enough for people to read them as the sole product of their singular imagination and labour. Thankfully, the exposure turned out to be the puncturing of some rather inflated egos rather than a validation of their eleventh hour claims of uncredited ‘homage’.

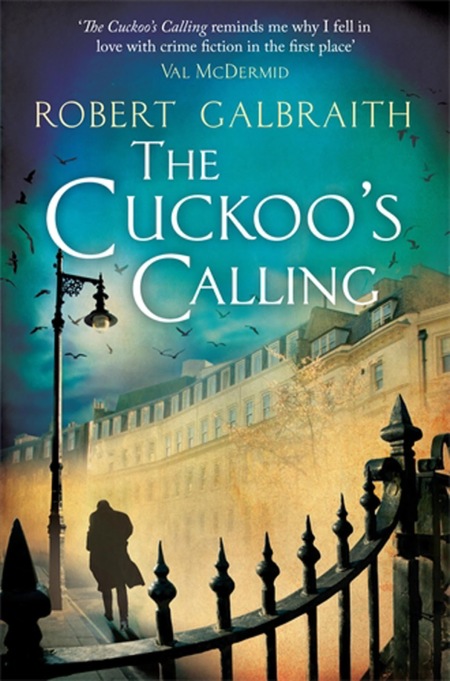

In the world of popular fiction, an anonymous tip-off to a reporter exposed J.K. Rowling as the real author behind the nom de plume ‘Robert Galbraith’, author of The Cuckoo’s Calling. Although anyone’s sceptical spider sense would have suspected that this leak came from the publishing company itself, who stood to make millions in the fallout – alongside the poetic symmetry of the book being titled after a ‘cuckoo’, a bird that deceptively lays its eggs in another bird’s nest – the outing was subsequently revealed as a slip-up with lawyers.

IMAGE: The Cuckoo’s Calling cover (Little, Brown & Company)

The fact that Rowling had a pseudonym was ultimately nothing surprising. Having penned the blockbuster Harry Potter series, the author faced a daunting level of expectation and scrutiny for any future projects (just as her first post-Potter book, The Casual Vacancy, had received), so shedding her identity must have been enormously freeing. The book could live or die on its own merits – or at least be read fairly, with lowered expectations. Unfortunately for Rowling, what happened next was not very surprising either…

‘Robert Galbraith’ had been introduced to the world as a retired military police investigator who had decided to pen his first novel, and the result was a critically well received but moderately selling work of crime genre fiction. Whatever his next book was going to be was looked forward to with a small but growing anticipation. However, once Rowling’s name was in the mix, not surprisingly, the work blew up. Stock ran out, reprints flew into production. A novel that had done little more than be commended as an admirably solid first attempt was suddenly a sensation, with readers combing through the pages looking for clues and grammatical tells.

Whether or not Rowling’s intention was to reveal Galbraith’s true identity in future (again: ‘cuckoo’), for now it was torpedoed, and the curiously tenuous interrelationship between audience and author was vividly exposed. Rowling’s masquerade had allowed her a restorative creative refuge that enabled her to speak to the reader in a wholly unique, more intimate way, with no preconceptions or expectations weighing down their discourse. With that mask stripped away, the cachet of her name proved enormously successful for the sales of the book and garnered her plaudits from those who had been duped, but it ultimately undermined her intent. The story is by no means a tragedy (again, she was commended for her skill, and her publishers are hardly crying), but it was a curious reminder of how an artist can feel trapped by their own public image, and how success can be so enmeshed with personality.

On a smaller scale (but in my opinion far more tragic), one of my personal favourite podcasts, Yeah, It’s That Bad, was seemingly undone by the need to preserve their anonymity. Yeah, It’s That Bad was a marvellously improbable product, one that consistently defied expectation in order to create something fantastically enjoyable, and, ironically for a show built around the premise of reviewing bad movies, refreshingly unique.***** Indeed, if you were tasked with writing down a list of all of the most clichéd elements that any derivative amateur podcast always seems to contain, what you would end up with is a bare bones description of what Yeah, It’s That Bad, in essence, turned out to be: A bunch of guys (check), sitting around together talking about a bad movie (check), reviewing what they had just seen while cracking jokes (check). And yet…

What elevated Yeah… (besides its brisk editing and deceptively high production value) was the hosts’ appealing chemistry. Joel, Martin and Kevin each had a distinct personality, and had clearly known each other for years, giving them a natural rapport that was inviting rather than alienating. Unlike the innumerable other pale imitations that littered the field of crappy-film-reviews, they weren’t simply reading off pre-written gags, no one was calling-in on a temperamental Skype connection; they were three people, sitting around a table, involved in a conversation – one that was brightened by their quick wit, penchant for exaggeration, and ability to build upon each other’s observations. There was no pretension, no forced guffaws, and they treated both their subject matter and their audience with respect.

In contrast to a podcast like How Did This Get Made?, which begins with the presumption that the film being watched is garbage and thus a cheap punching bag, the hosts of Yeah… all clearly shared a genuine love of art and film (and the pleasures of a cheesy film done right), and were legitimately interested in debating whether the material they had viewed was unjustly maligned. Consequently, amongst the jocularity, there was thoughtful discussion of narrative conventions and cinematic pitfalls, the diminishing returns of anodyne sequels, the scourge of the Mary Sue, problems with pacing and characterisation – their analysis of the film Sucker Punch (a piece of cinema that I found grotesque) remains one of the most interesting and considered that I have yet encountered.

But best of all for those who decided to follow these three on their journey, Joel, Martin and Kevin understood radio as a theatre of the mind, and knew how to propel and expand upon a comedic riff without tipping over into lazy catchphrase. By the time the show was brought to its premature end the ‘Yeah It’s That Bad Headquarters’ was said to be an orbital satellite circling Earth, Dennis Quaid, doyen of contemptuously wooden acting, was the patron saint of a swollen congregation of actors who phoned in their performances having barely wiped the craft services lunch from their mouths, the Beef-O-Meter was a meticulously calibrated gauge of an actor’s hotness (the Rock almost broke the scale), Joel’s never-ending quest to ‘follow the money’ was reaping damning results, and the Twilight films were one mumbled, dead-eyed Kristen Stewart performance away from killing them all.

It was an adaptive production, one that evolved with the needs of its audience and the benefit of the discussion (even the podcast’s name and premise weren’t locked in for the first handful of shows as they found their rhythm). They took fan requests, they invited feedback, they grew and honed and streamlined; but the one feature that they maintained, that ultimately turned into their Achilles heel, was their anonymity. It was never a secret that they were using fake names – indeed, it was repeatedly cited, without fanfare. They weren’t industry insiders, or famous faces, or gossips with dirt to dish, they were just three friends producing a free program, who weren’t interested in becoming famous if it meant impacting upon their daily life.

The story was left necessarily vague (and I freely admit that the following account may be riddled with inaccuracies), but for those who followed the drama in the show’s final weeks, it was heavily implied that an online blogger had discovered who the three leads of the podcast actually were, and was going to reveal their names to the world. Why anyone would want to know this completely irrelevant information, or what its exposure would even achieve, was left a complete mystery. Those already familiar with the program had no interest in who these people ‘really’ were; those unfamiliar would care even less. The trio therefore appealed to the blogger not to reveal their identities, but apparently the idea that someone would not welcome fame was too much to comprehend, and the blogger intended to do so anyway.

But their anonymity wasn’t a bluff. It wasn’t some playful game that they were inviting their audience to participate in uncovering. Even in such a whimsical and mischievous format their privacy was a necessity – ironically, it allowed them to be more open with their fans, to carve out a space in which they could speak honestly and engage freely without impact upon their occupations or personal lives. So the damage was done. One blogger’s desire to publish a scoop that no one wanted, revealing identities that were irrelevant anyway, destroyed the very thing that they were misguidedly trying to intrude upon. And with that, identity was shown to once again have a price – even for a free podcast.

IMAGE: Yeah It’s That Bad fan art by ‘Dan’

Phase the Fifth: Wherein I Get Pissy With Man of Steel Yet Again

The content of much of 2013’s entertainments seemed obsessed with identity too, exploring and overanalysing humanity’s sense of self. Iconic characters were scrutinised, reintroduced, redefined. Famous figures were repeatedly dismantled, separated into their constituent parts, and reconstructed. It was a year of origins, and tales of stripping characters down to their core, the results of which were sometimes highly profitable, at other times incoherent trash.

Lara Croft spent the beginning of the year being re-birthed into the world in Square Enix’s Tomb Raider reboot, a bombastic origin story (which, despite a few issues, I enjoyed a great deal, actually) that had her both physically and metaphorically doing battle with the weight of her already established legend. Alongside the shift in genre (from the straight 3D puzzle platforming of the old to the rollicking, sometimes horrifying, survival action of the new), and a design that literally has the player participate in growing her skills up from rudimentary quick-time-events into the assertive, capable adventurer that we remember, the story seems to play out a meta-narrative of fighting against the weight of Lara’s past as a videogame idol.

IMAGE: Tomb Raider (Square Enix)

In the fiction, Lara is stranded on an island in which a tribe of homicidal worshipers are devoted to an ancient demigoddess that they are trying to revive in a new body. As a metaphor for the foreseeable backlash of fans who wanted a straightforward remake of the old game, the imagery is particularly potent. This crazed armada of zealots (seriously: despite this island being presented as a mixture of LOST and Gilligan’s Island it is like Spring Break for unhinged sociopaths) are trying to wholesale resurrect the idealised female figure that they adore – but as Lara exhibits, that creature no longer belongs in this fiction. Instead, literally fighting her way out of the shadow of that history, Lara manifests the franchise’s new female protagonist: a resourceful, plucky, weathered young warrior, eager to do a bit of archaeology if people will stop trying to bury a hatchet in her face for five minutes.

When she stabs the reanimated statue of the demon that would seek to reclaim this world, it explodes in an eruption of pixels, the sun only then breaking through the cloud cover to restore life to the land. Lara’s existential quest of self-discovery is finally at an end. The ethereal power and beauty of the dead queen is never disputed, but the act of clinging to her memory so slavishly is shown to result only in stagnation, disappointment and decay. Lara sloughs off the expectation of the old to resurface as something familiar, but new. What exactly that turns out to be remains to be seen in future instalments, but for now I am certainly looking forward to following the journey.

(I should also briefly clarify: I am in no way exaggerating when I use the word ‘re-birth’ in describing this reintroduction of Lara Croft. Replay that opening sequence in which Lara has to scramble and claw her way out of a cave that is convulsing and collapsing around her, only to emerge into the world wet, and crying, and blood-smeared, and the game creator’s intent to show how ‘A survivor is born’ becomes quite (perhaps rather too) overt.)

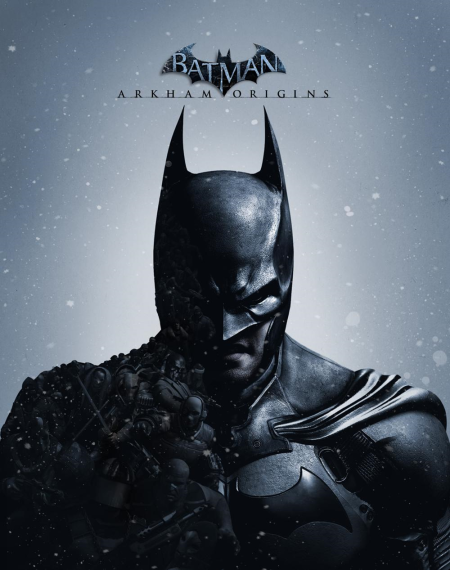

Batman: Arkham Origins, as the name implies, likewise tried to embrace the possibilities of a prequel – although a cynic might suggest this was more an attempt to disguise a filler entry into the franchise by a B-team of coders, rather than a crucial addition to the overarching narrative. It proved to be by no means a bad game – the foundations upon which it was built are too strong – but its narrative does seem a little overstuffed with first meetings and introductions, attempting to cram the seedlings of an entire mythos into the span of a single evening gauntlet.

IMAGE: Batman Arkham Origins (Warner Bros. Games Montreal)

This promise to dig into the core of Batman’s identity was so central to the game’s theme that even its advertising slogan was intent on calling it out. ‘Your enemies will define you’, it declared – a potentially dangerous gambit for a narrative so riddled (not a pun) with players from Batman’s B and C level rogues gallery. Clearly this was actually a reference to the predictable reveal of the narrative’s actual big bad, and the establishment of their yingy yangy brand of co-dependent mental instability, but until that moment, Firefly, Copperhead and Black Mask are some pretty weak tea that doesn’t say much about the man behind the cowl. …But again, perhaps that was ultimately the point. Until Batman’s true antagonist emerged he was just going through the motions.

In cinemas, Man of Steel – one of the most divisive pieces of mass market entertainment of the year – was an attempt to likewise re-establish an icon, to explore the identity of the ‘man’ behind the legend of Superman. …I say ‘attempt’, of course, because all it ultimately managed to offer was Zack Snyder’s biggest budget version of the same tediously adolescent nihilistic torture porn he has been reproducing ad nauseam throughout his career. The fact that he managed to turn one of fiction’s most hopeful, inspirational figures into a mopey, selfish, irresponsible manchild, with an unchecked messiah complex, is so grotesque that (if it appeared in any way that he’d done it intentionally) it might almost be interesting; but the sycophantic way that Snyder depicts the perennially idiotic people of Earth unconditionally loving our new alien overlord, despite his wanton destruction, despite his psychotic mood swings, despite becoming an unapologetic law unto himself, makes the whole film crumble into a lazy, emotionless void of themeless, characterless carnage.

…I did not enjoy the film. You probably couldn’t tell.

Iron Man 3 (which, going by the reaction on the internet, I alone on Earth seem to have liked) bucked the prequel/reboot trend to actually advance a plot, but even it did so by choosing to break down the character of Tony Stark (yes, in a way a little too reminiscent of Skyfall …and The Dark Knight Rises …and The Avengers …and The Care Bears Movie, probably), and rediscover the man beneath the suit (…or the several hundred progressively inferior suits, as the case may be). It even flashed back to the years before Tony had learned to take responsibility for his actions, fashioning a proto-antagonist, apparently of his own making, that he had to overcome in the present to reclaim his life.

IMAGE: Iron Man 3 (Marvel/Disney)

No doubt the year’s most baffling attempt to explore this theme of selfhood came (predictably) in the form of a misguided film adaptation of a classic novel. The Great Gatsby, a story that gnaws at the impossible fantasy of ever knowing the truth of another human being – what motivates them, what drives their every action, even in spite of themselves – was turned into a fidgety music video that mostly chewed the scenery and hyperbolically bloated every moment of subtlety that gave the original work such lean, haunting grace. Instead of a melancholy character’s reflection upon a defining, if inexplicable time in his personal history, we had Nick Carraway going insane and desperately writing the book from within an asylum. Because that adds… well… absolutely nothing, besides being mawkish and stupid. But hell, why not? We’re already filming in cinema’s most pointless 3D, with dance routines that feel like acid trips, and a whole recreation of Long Island that looks like a surreal daydream slapped together by the work-experience kid at Industrial Light and Magic – so go nuts.

IMAGE: The Great Gatsby (Warner Bros.)

It’s a great shame, though, because if director Baz Luhrmann had not turned the narrative into a shallow cartoon, it could have been a chillingly prescient summation of the themes of identity, presumption and self-delusion that have echoed throughout this year. Had the film managed to capture the glistening nostalgia of Gatsby’s unattainable dream, or the suave facade that obscured his ineffable truths, it could have had much to say. Instead all it exhibited was how hollow a film can become when its creators repeat the same mistakes its characters do: Gatsby puts on a big display to get Daisy’s attention and consequently gets chewed up in the maelstrom of her and her husband’s vapid recklessness; Luhrmann, mistaking spectacle for substance, does much the same, overburdening his work with gaudy tricks and distractions that eventually smother its central, sober conceit.

‘So we beat on, boats against the current, borne back ceaselessly into the past.’

Well he was right about one thing. The audience certainly felt beaten.

In the land of television, showrunners, like filmmakers, seemed obsessed with returning to the formative years of familiar characters, reintroducing them in unfamiliar contexts under the presumption that this interrogation of their genesis would somehow reveal something new. Hannibal invited viewers to experience the reptilian grace and gastronomical proclivities of Hannibal Lecter before he got all orange jumpsuit-y in The Silence of the Lambs (and yes, I know that Red Dragon was a prequel too). Meanwhile, anyone who ever wondered what Norman Bates got up to in the years before his mother became the world’s most judgemental rocking-corpse could watch Bates Motel and live out the excitement of seeing an awkward pubescent boy turn inexorably into a sex-crazed sociopath (arguably something most already are).

Dracula tried to recast fiction’s most flamboyant, dead-eyed bloodsucker (no, not Robert Pattinson; the other one) into a newly industrialising London, deciding that the best way to capture the inconceivable menace of a character who necessarily remains in the shadows of a novel shrewd enough to reveal him only in glimpses and half-truths, was to slap him in the centre of a serialised melodrama that revolved around him, that attempted to explain his motivations, and that stripped him of his portentous obscurity.

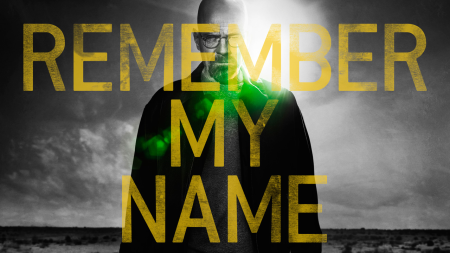

Almost certainly the year’s biggest television event, however (aside from a certain rouge wedding), was the conclusion of Breaking Bad, a show that offered one of the most compelling, absorbing depictions of a human journey into moral compromise and abject evil. Vince Gilligan’s Faustian descent was so deeply invested in questioning its protagonist’s fractured identity, and the consequence of his incremental conciliations, that it ran to its conclusion with the focal character’s darkly ironic demand ‘Remember my name’ resounding through every scene. Whether anything really was left of Walter White beneath the overwhelming monstrosity of ‘Heisenberg’ haunted the show’s final episodes. Was White still the man he believed himself to be? Was he the sum of his crimes? Are we our intent or our action? Are we what we hope to be, or the legacy others write for us?

IMAGE: Breaking Bad (AMC)

Phase the Sixth: Wherein I Explain What This Tedious ‘Phase’ Conceit Is All About

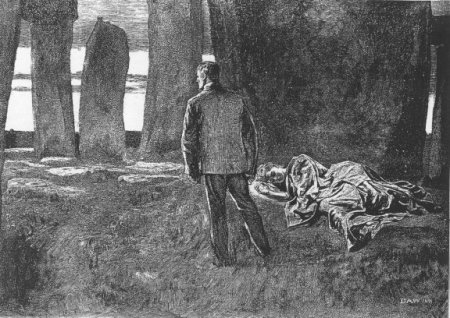

At the end of Thomas Hardy’s Tess of the d’Urbervilles (spoiler warning for a century-old novel), the heroine Tess has been killed – seemingly sacrificed to the whim of a hostile universe that has finished its sport with her. Meanwhile, her lover, Angel Clare, is ironically punished by the author for his earlier abandonment of Tess with the suggestion that he will now go on to marry her younger sister, having promised Tess in her final moments that he would do so. Initially it seems like a strange penalty. Earlier in the book Angel had been mortified that the young, virginal beauty he had fallen in love with, having been the victim of a sexual assault, had already borne and lost a child, so in theory he has now been ‘rewarded’ with exactly what he desired: a younger virginal beauty, more divine than even her deceased sibling. Indeed, Liza-Lu is described at this point as:

‘a tall budding creature – half girl, half woman – a spiritualised image of Tess, slighter than she, but with the same beautiful eyes.’ (p.488)

But the clear, lamentable truth that hangs over this conclusion is that of course Tess cannot be so easily replaced, cannot be substituted. Clare gets what he once wanted, but it will forever be the most vivid reminder of the irreplaceable individual he once callously rejected.

It’s an absence symbolised by the description of Liza-Lu’s unsullied perfection. Tess, in contrast to her sister, was flawed, marked by a physical blemish that once enraptured Clare. Earlier in the novel, when he first becomes smitten with Tess, she is described thus:

‘How very lovable her face was to him. Yet there was nothing ethereal about it; all was real vitality, real warmth, real incarnation. And it was in her mouth that this culminated. Eyes almost as deep and speaking he had seen before, and cheeks perhaps as fair; brows as arched, a chin and throat almost as shapely; her mouth he had seen nothing to equal on the face of the earth. To a young man with the least fire in him that little upward lift in the middle of her red top lip was distracting, infatuating, maddening. He had never before seen a woman’s lips and teeth which forced upon his mind with such persistent iteration the old Elizabethan simile of roses filled with snow. Perfect, he, as a lover, might have called them off-hand. But no – they were not perfect. And it was the touch of the imperfect upon the would-be perfect that gave the sweetness, because it was that which gave the humanity.’ (pp.208-9)

Already a story fundamentally concerned with the nebulous nature of identity – Tess Durbeyfield’s life is tragically upended when it is believed that she is actually a descendant of the d’Urberville line of ancient knights – the conclusion of the novel reveals itself to be a condemnation of Angel’s earlier sanctimonious judgement. For Angel, Tess proved to be an idea – a fantasy upon which he could project his own longing. Upon discovering the truth beneath his pretty lie he fled, only later realising his mistake. Consequently, the truth of that mistake will haunt him the rest of his life. He failed to see the woman beneath the image until it was too late.

Because we are all our ineffable faults and flaws and failures, all marked by our history. And the way we carry our imperfections defines us – just as Tess, resolute, carried her maddeningly imperfect lip.

In this, the beginning of a new millennium, our popular culture seems to be depicting us all in a burgeoning state of adolescent self-awareness, already striving to learn the lesson that Angel Clare ignorantly missed. Whether a reaction to the fears of terrorism and global war that have hung over the 21st century, or a natural progression of our growth into a more immersive digital age, modern culture has seemingly reached a point of necessary personal reflection. We’ve turned the lens back upon ourselves, saturating the world with an onslaught of pop-cultural ‘selfies’. But it’s not quite the narcissistic act of self-aggrandisement that it might at first appear. Instead it is an attempt to try and make sense of ourselves and our circumstance, to define who we are and what we believe in, through introspection and self-analysis.

Sometimes it is as all-encompassing as dissecting the invasion of a clandestine global spy program; sometimes it is wondering why Batman never used those shock gloves again in the following games. We might be grinning inanely into a camera; protesting unjustifiable personal tragedy; playing with our audience’s expectations with a false persona; or dressing up our paranoias in superhero theatrics; in any instance, the questions remain universal: it is a meditation upon who and where we are, and what all of that means going forward.

What are those indefinable imperfections that give us our humanity, and how can we best preserve them in the daunting, unknowable age still to come?

IMAGE: Tess of the d’Urbervilles illustration by D.A. Wehrschmidt

* On the plus side, previous, artificial attempts to name the year (such as ‘The year of Luigi’) are excoriated by the reality of lived experience (revealing instead ‘The year of people-are-still-releasing-stuff-on-the-Wii-U?’) …Sorry. That was a cheap shot. I still love you, Nintendo.

** Apparently the origin of the word ‘Selfie’ is Australian, having been traced back to an Australian forum post (in which a young man took a photo of some damage he had done to his face while extremely drunk) in 2002. As the Oxford summary states, we ’Strayans do like to add ‘-ie’ to the ends of words – barbie, cossie, sickie, freebie, pressie, symbolic interactionismie (okay, that one’s less popular). So let’s all take a moment to acknowledge that we have a young, drunk ‘Aussie’ to thank for this year’s expansion of the English language. You’re welcome. …Now please forgive us for Baz Luhrmann.

*** And yes, I said ‘Headlines’, so let’s just take it as read that I am going to be shamefully skipping many of the most tragic and genuinely significant global events, such as the ongoing conflict in Syria and the massacres in Egypt – after all, that is sadly what the news media far too frequently seems to do.

**** It was also the year that Katy Perry released an album called Prism, which caused a different kind of rightful public outcry. I’m being facetious, of course, but just so that it doesn’t seem like I’m taking a lazy pot shot at a recording artist that bores me desperately (I am), here’s a fun bit of trivia to justify the snark: Perry’s new record was literally banned here in Australia. Not because of the content of the music (that would require there to be content – BAM!), but because she had woven living seeds into the album’s paper sleeve. The idea, I believe, was that you plant the album and it would grow into flowers. Unfortunately for Perry, the flowers are listed as a biohazard here in Australia (so are her lyrics – BAM BAM!), meaning that listeners can’t take her advice and bury her latest album underground (no matter how much they may want to after listening to it – And it’s a Hat Trick!) …I want to take this opportunity to apologise to any Katy Perry fans reading. I really don’t know what just got into me.

***** You can read a lovely elegiac summary of Yeah It’s That Bad that catalogues its hosts, its format, and its demise here. Vale Yeah It’s That Bad. In a world of weak weak weak men, you did good.